AI models can do remarkable things, but on their own they cannot give AI agents the structure and reliability they need. We address this problem at its root by building a new class of systems for trustworthy machine thinking and reasoning.

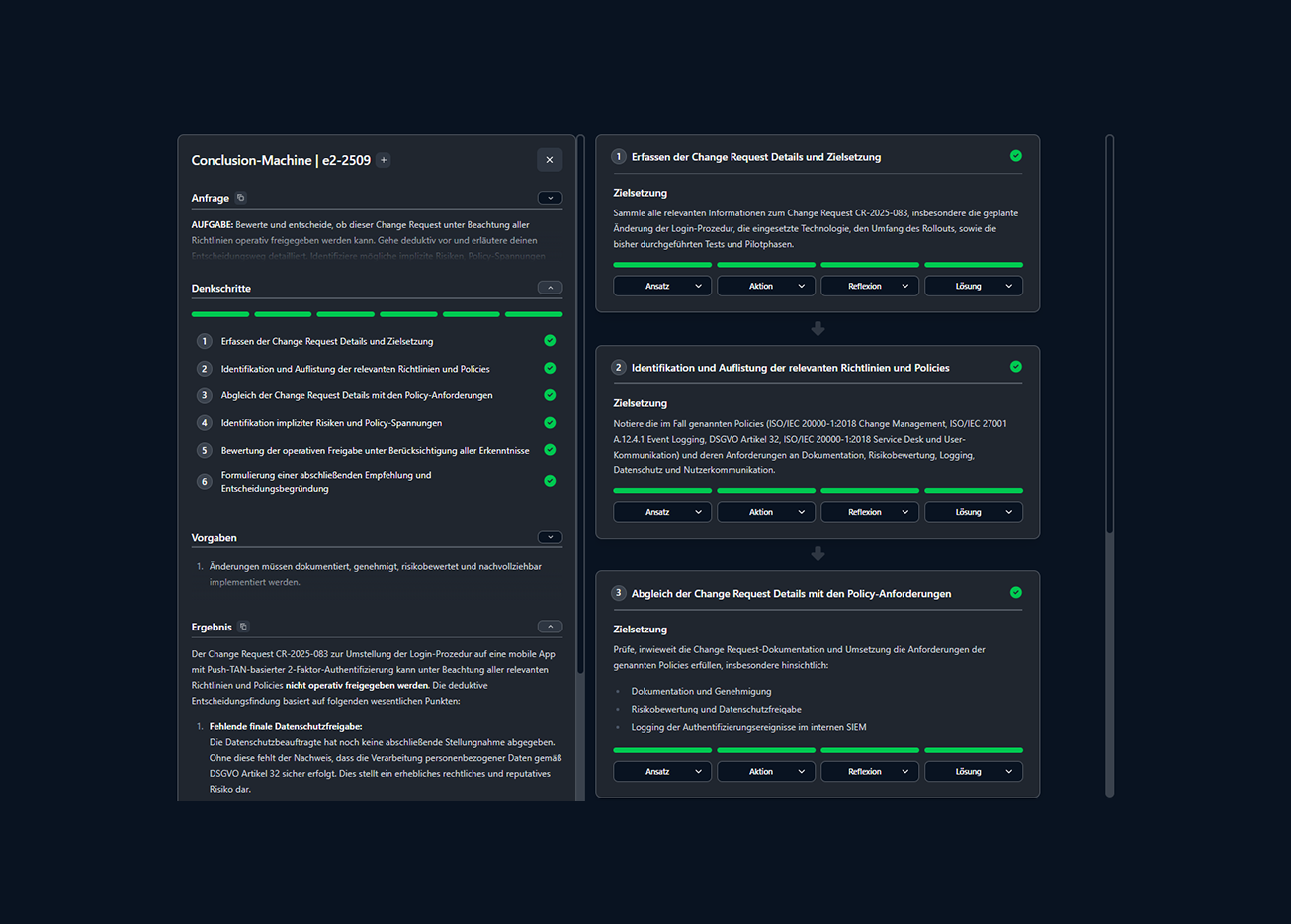

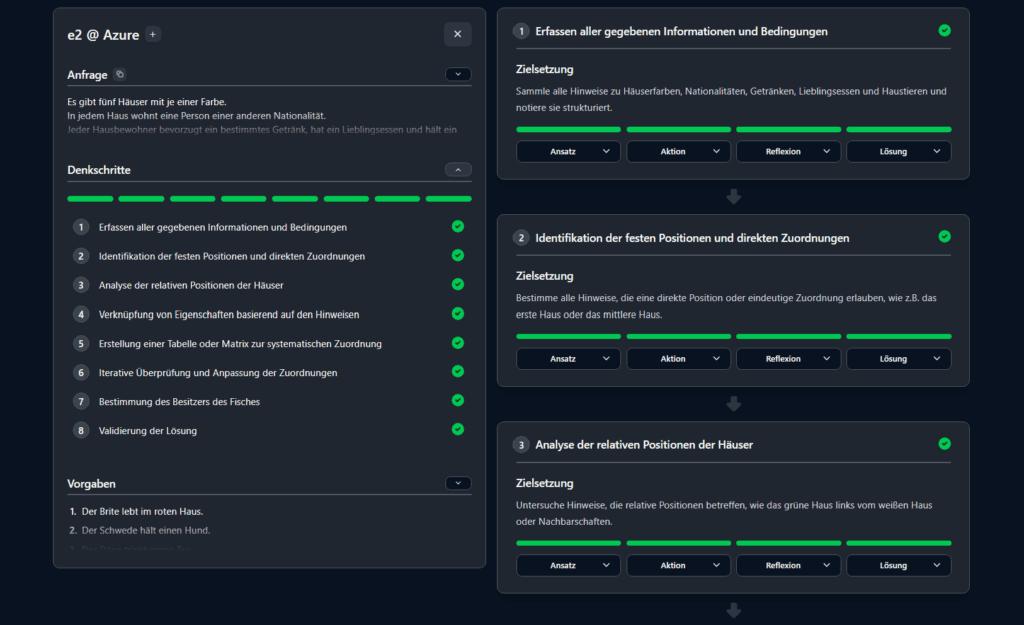

See thought processes unfold in real time, with comprehensive explanations, logically reasoned conclusions, and transparent solution paths.

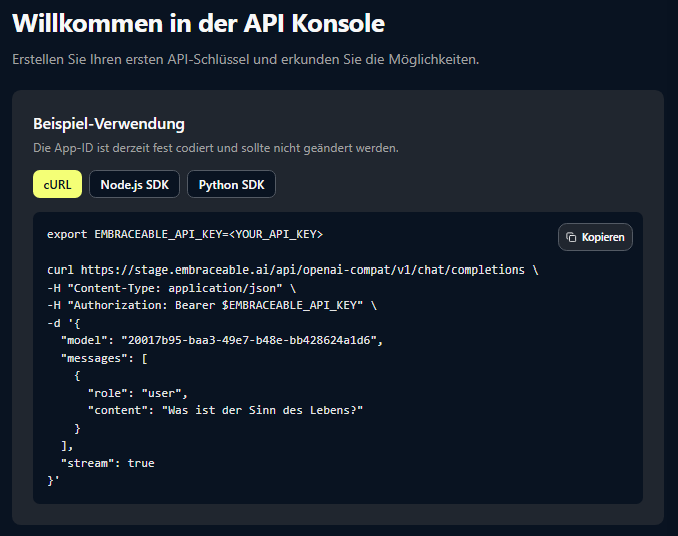

Our API is structurally compatible with the OpenAI SDK, which makes migration straightforward. You can find more information in our documentation.
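"Structurally compatible" usually means the request and response shapes follow OpenAI's chat completions format, so switching providers is mostly a matter of changing the base URL, the API key, and the model name. The sketch below builds (but does not send) such a request using only the Python standard library. The base URL `https://api.example.com/v1`, the model name `example-thinking-model`, and the helper `build_chat_request` are hypothetical placeholders, not this product's actual endpoint or model identifiers; consult the documentation for the real values.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the endpoint and model name from the documentation.
BASE_URL = "https://api.example.com/v1"
MODEL = "example-thinking-model"


def build_chat_request(base_url: str, api_key: str, model: str, messages: list) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(
    BASE_URL,
    "sk-test",  # dummy key for illustration only
    MODEL,
    [{"role": "user", "content": "Should we approve this invoice?"}],
)
# Sending it would be: urllib.request.urlopen(req)
```

With the official OpenAI Python SDK, the equivalent switch is typically made by passing `base_url` and `api_key` to the `OpenAI` client constructor while leaving the rest of the calling code unchanged.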

Winner of the IONOS AI Project of the Year 2025 Award

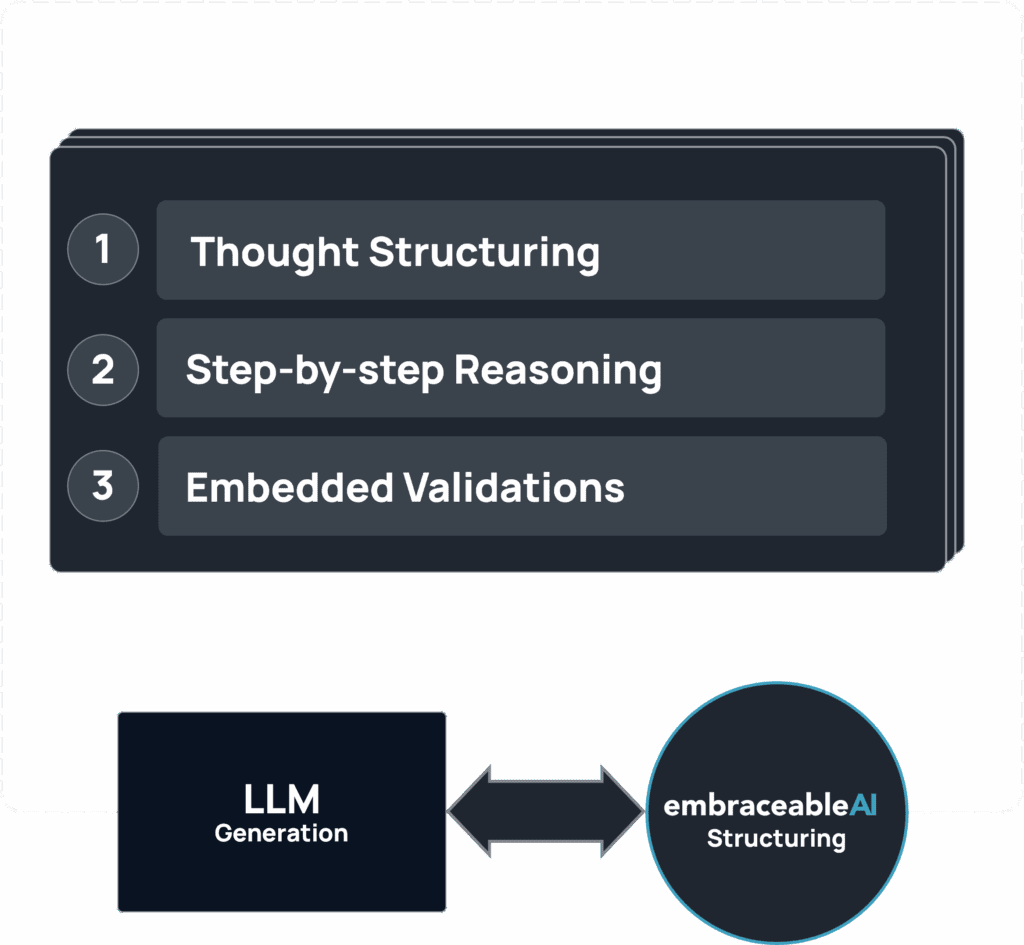

Turning word sequences into high-quality thought processes takes more than the AI models themselves: it requires an explicit “Thought-Structuring & Process-Guidance Architecture,” an additional layer above the AI models. This is exactly what we have built.

The Foundation for Your Sophisticated AI Agents

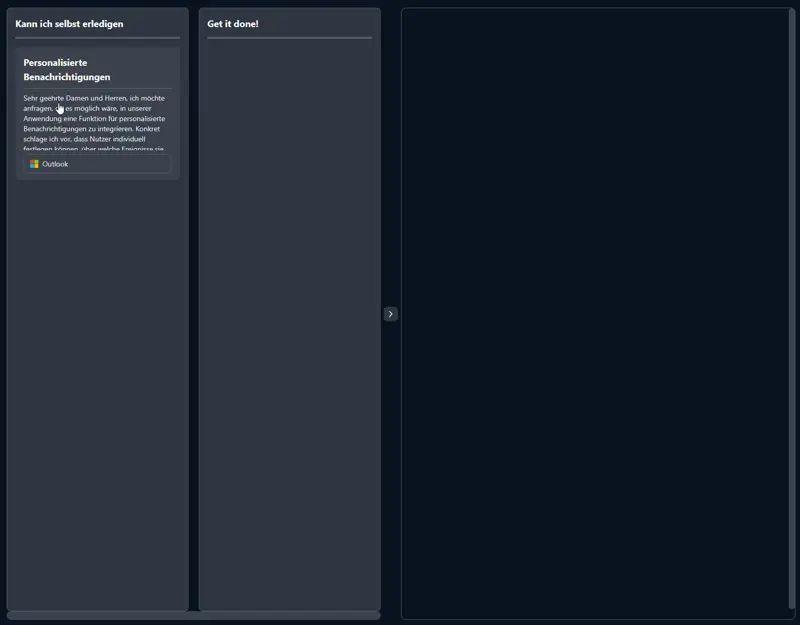

You can enter individual tasks in our Web UI to start decision-making processes and inspect every conclusion.

This gives you trust, control, and reproducible decision quality.


Our Solutions Team supports your projects involving agentic workloads, either in collaboration with your tech teams (which we call “Co-Engineering”) or as complete turnkey solutions.

Each quarter, we offer a limited number of Challenges in which we demonstrate the capabilities of our “Thinking Machine” technology on selected projects.

At its core, the Challenge works like this: within a secure sandbox environment and in no more than 4 weeks, we prove to you that Thinking Machine technology can make your AI decisions robust and transparent.

Evaluation criteria include:

LLMs reproduce linguistic patterns from their training data, but they have no concept of thoughts or justifications. Yet these are exactly the structural elements that the decision logic of sophisticated AI agents requires. This is why LLMs alone are the wrong starting point for AI agents.

Thinking Machines embed LLMs into an architecture that enables structured thinking and reasoning. The LLM provides semantic coherence; the Thinking Machine generates and guides thought processes and derives conclusions and decisions from them. Simply put: Thinking Machines are LLMs plus a cognitive architecture for thinking and reasoning.
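As a rough illustration of this division of labor (a generic sketch, not the vendor's actual architecture), the Python code below lets the surrounding program own a fixed sequence of thinking steps and calls a language model only to fill in each step, keeping a trace of every intermediate thought. `guided_reasoning`, `Thought`, `ThoughtProcess`, the step names, and `fake_llm` are all hypothetical; `fake_llm` is a stub standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: prompt in, text out.
LLM = Callable[[str], str]


@dataclass
class Thought:
    step: str
    content: str


@dataclass
class ThoughtProcess:
    task: str
    thoughts: List[Thought] = field(default_factory=list)


def guided_reasoning(task: str, steps: List[str], llm: LLM) -> ThoughtProcess:
    """The architecture fixes the order of thinking steps; the LLM only fills in each one."""
    process = ThoughtProcess(task)
    context = task
    for step in steps:
        content = llm(f"{step}\nContext so far:\n{context}")
        process.thoughts.append(Thought(step, content))
        # Each step sees the accumulated, recorded thoughts of the previous steps.
        context += f"\n[{step}] {content}"
    return process


def fake_llm(prompt: str) -> str:
    # Stub model: echoes the step instruction (the first line of the prompt).
    return "answer to: " + prompt.splitlines()[0]


proc = guided_reasoning(
    "Approve invoice #17?",
    ["Collect facts", "Form hypotheses", "Draw conclusion"],
    fake_llm,
)
for t in proc.thoughts:
    print(t.step, "->", t.content)
```

The design point is that the sequence of steps and the recorded trace live outside the model, so they stay inspectable and reproducible regardless of which LLM is plugged in.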

Guardrails catch errors after the thinking is done; Thinking Machines prevent them during thinking. They integrate policies, norms, and rules directly into the logical reasoning process instead of checking them retroactively. That is the difference between external control and internal cognitive control.
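The contrast between checking rules during reasoning and checking them afterwards can be made concrete with a small, generic sketch (not the product's mechanism). Policies are modeled as named predicates; the inline variant checks every intermediate step as it is produced, while the guardrail variant inspects only the final answer. The policy names, vendor list, and step dictionaries are hypothetical example data.

```python
from typing import Callable, List, Tuple

# Hypothetical policy: a name plus a predicate that a reasoning step must satisfy.
Policy = Tuple[str, Callable[[dict], bool]]

POLICIES: List[Policy] = [
    ("amount_limit", lambda s: s.get("amount", 0) <= 10_000),
    ("vendor_known", lambda s: s.get("vendor") in {"ACME", "Globex"}),
]


def violations(step: dict, policies: List[Policy]) -> List[str]:
    """Names of all policies the given step violates."""
    return [name for name, ok in policies if not ok(step)]


def reason_with_inline_policies(steps: List[dict], policies: List[Policy]) -> Tuple[List[dict], List[str]]:
    """Check every intermediate step as it is produced; stop at the first violating step."""
    accepted: List[dict] = []
    for step in steps:
        broken = violations(step, policies)
        if broken:
            return accepted, broken
        accepted.append(step)
    return accepted, []


def guardrail_after(final_answer: dict, policies: List[Policy]) -> List[str]:
    """Post-hoc guardrail: only the final output is inspected."""
    return violations(final_answer, policies)


# The middle step relies on an unapproved vendor, but the final answer looks clean.
steps = [
    {"vendor": "ACME", "amount": 500},
    {"vendor": "Initech", "amount": 500},
    {"decision": "approve", "vendor": "ACME", "amount": 500},
]
accepted, broken = reason_with_inline_policies(steps, POLICIES)
```

Here the inline check stops at the second step with a `vendor_known` violation, whereas `guardrail_after(steps[-1], POLICIES)` reports nothing, because the flawed intermediate step never reaches the final output.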

Short answer: no. Frameworks orchestrate prompts, tools, and similar components; Thinking Machines orchestrate thoughts. They create a cognitive layer above LLMs. The goal isn’t integration but control over intelligence: structured decision logic across APIs, models, and data sources.

This makes Thinking Machines a new class of system, not a new shortcut.

Thinking Machines technically separate hypothesis formation from conclusion. They check every deduction for logical consistency and document justifications in a traceable way.
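To illustrate what separating hypotheses from conclusions and keeping traceable justifications can look like, here is a minimal sketch using a different, classic technique: forward chaining over simple if-then rules, where every derived fact records the rule and premises that produced it. This is an assumption-laden illustration, not the vendor's implementation. A hypothesis (for example, one proposed by an LLM) is accepted only if it can be derived and no declared contradiction arises. All rule names, facts, and helpers (`derive`, `explain`, `check`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple

Justification = Tuple[str, FrozenSet[str]]  # (rule name, premises)


@dataclass(frozen=True)
class Rule:
    name: str
    premises: FrozenSet[str]
    conclusion: str


def derive(facts: Set[str], rules: List[Rule]) -> Dict[str, Justification]:
    """Forward chaining: every derived fact records the rule and premises that produced it."""
    just: Dict[str, Justification] = {f: ("given", frozenset()) for f in facts}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in just and all(p in just for p in rule.premises):
                just[rule.conclusion] = (rule.name, rule.premises)
                changed = True
    return just


def explain(fact: str, just: Dict[str, Justification], depth: int = 0) -> List[str]:
    """Render the justification tree of a fact as indented lines."""
    rule, premises = just[fact]
    lines = ["  " * depth + f"{fact} <- {rule}"]
    for p in sorted(premises):
        lines += explain(p, just, depth + 1)
    return lines


def check(hypothesis: str, facts: Set[str], rules: List[Rule],
          contradictions: List[Tuple[str, str]]) -> Tuple[bool, List[str]]:
    """Accept a hypothesis only if it is derivable and the derived facts are consistent."""
    just = derive(facts, rules)
    for a, b in contradictions:
        if a in just and b in just:
            return False, [f"inconsistent: {a} and {b} both derived"]
    if hypothesis not in just:
        return False, [f"not derivable: {hypothesis}"]
    return True, explain(hypothesis, just)


FACTS = {"invoice_matches_po", "goods_received"}
RULES = [
    Rule("R1", frozenset({"invoice_matches_po", "goods_received"}), "three_way_match"),
    Rule("R2", frozenset({"three_way_match"}), "approve"),
]
CONTRADICTIONS = [("approve", "reject")]

ok, trace = check("approve", FACTS, RULES, CONTRADICTIONS)
print("\n".join(trace))
```

For the hypothesis `approve`, the trace lists each step back to the given facts; a hypothesis such as `reject` is rejected as not derivable, and adding a rule that derives both `approve` and `reject` makes the check fail as inconsistent.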

The result is not merely “probable” but reasoned. Hallucinations can be identified, explained, and corrected rather than hidden.

Yes. You can test Thinking Machines directly in the web interface or integrate them via our API just like a language model.

Both are free to get started and require no integration. The AI’s thinking changes, not your setup.