AI language models demonstrate remarkable performance. However, in long chains of reasoning, they run up against a de facto structural limit of their representational space.

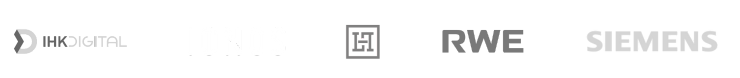

To overcome this limitation, we add a second representation space. This Dual-Space approach makes it possible to structure and stabilize long chains of thought, taking AI's capacity for structured reasoning to a new level.

Token Space: This layer represents the linguistic surface of thoughts.

Functional Space: This layer represents the cognitive function of thoughts.

How they complement each other

The architecture relies on a bidirectional coupling. The Token Space provides the expressive power to generate hypotheses, while the Functional Space provides the structural skeleton to validate and organize them.

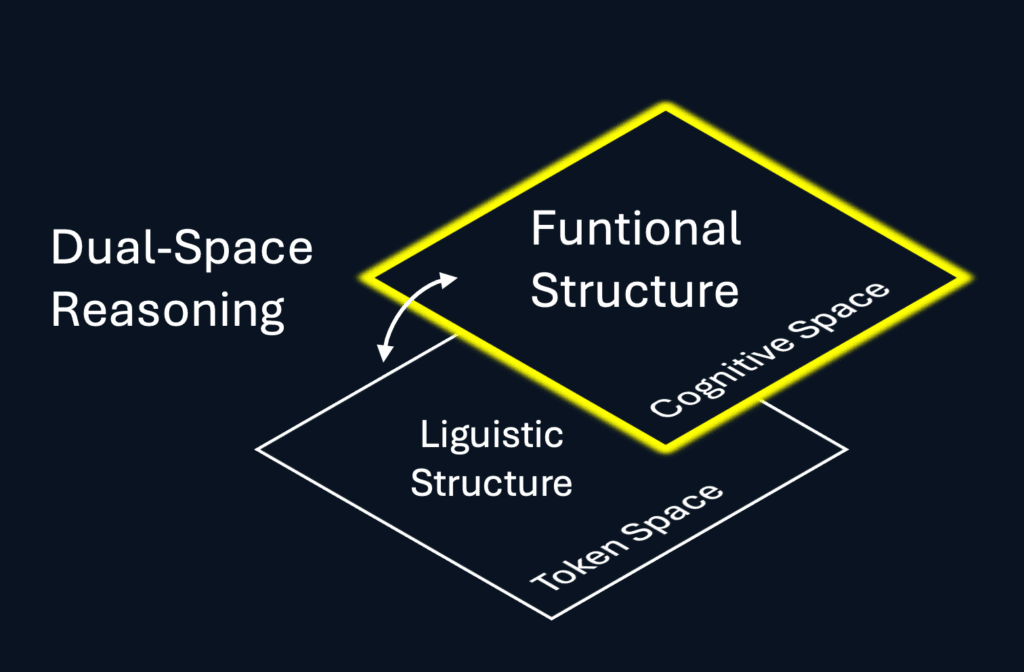

Dynamic Coupling — Dual Space

The two representation spaces have different dynamics: The token space carries linguistic creativity, while the functional space carries the structure of the reasoning process.

Neither space can be viewed in isolation; the two must be coupled with each other.

This is exactly where Cognitive Control comes into play: it stabilizes long chains of thought through functional sequencing.

The token space alone cannot perform this task structurally, because it has no notion of functional states.

The functional layer thus opens a new dimension in AI reasoning.
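Functional sequencing can be pictured as a small state machine over functional states. The Python sketch below is illustrative only, not the CCM mechanism: the state names `PLAN`, `DERIVE`, `CHECK`, `CONCLUDE` and the transition table `ALLOWED` are hypothetical assumptions, chosen to show how a functional layer could reject a chain whose steps occur in an invalid order.

```python
from enum import Enum, auto


class FunctionalState(Enum):
    """Hypothetical functional states of a reasoning step (illustrative only)."""
    PLAN = auto()
    DERIVE = auto()
    CHECK = auto()
    CONCLUDE = auto()


# Hypothetical transition table: which functional state may follow which.
ALLOWED = {
    FunctionalState.PLAN: {FunctionalState.DERIVE},
    FunctionalState.DERIVE: {FunctionalState.DERIVE, FunctionalState.CHECK},
    FunctionalState.CHECK: {FunctionalState.DERIVE, FunctionalState.CONCLUDE},
    FunctionalState.CONCLUDE: set(),
}


def is_valid_sequence(states: list[FunctionalState]) -> bool:
    """True if the chain starts with PLAN, ends with CONCLUDE, and every
    consecutive pair of states is an allowed transition."""
    if not states or states[0] is not FunctionalState.PLAN:
        return False
    if states[-1] is not FunctionalState.CONCLUDE:
        return False
    return all(nxt in ALLOWED[cur] for cur, nxt in zip(states, states[1:]))
```

Under this assumed table, `[PLAN, DERIVE, CHECK, CONCLUDE]` passes, while `[PLAN, CONCLUDE]` fails because it jumps to a conclusion with no derivation or check in between.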

In-Situ Alignment

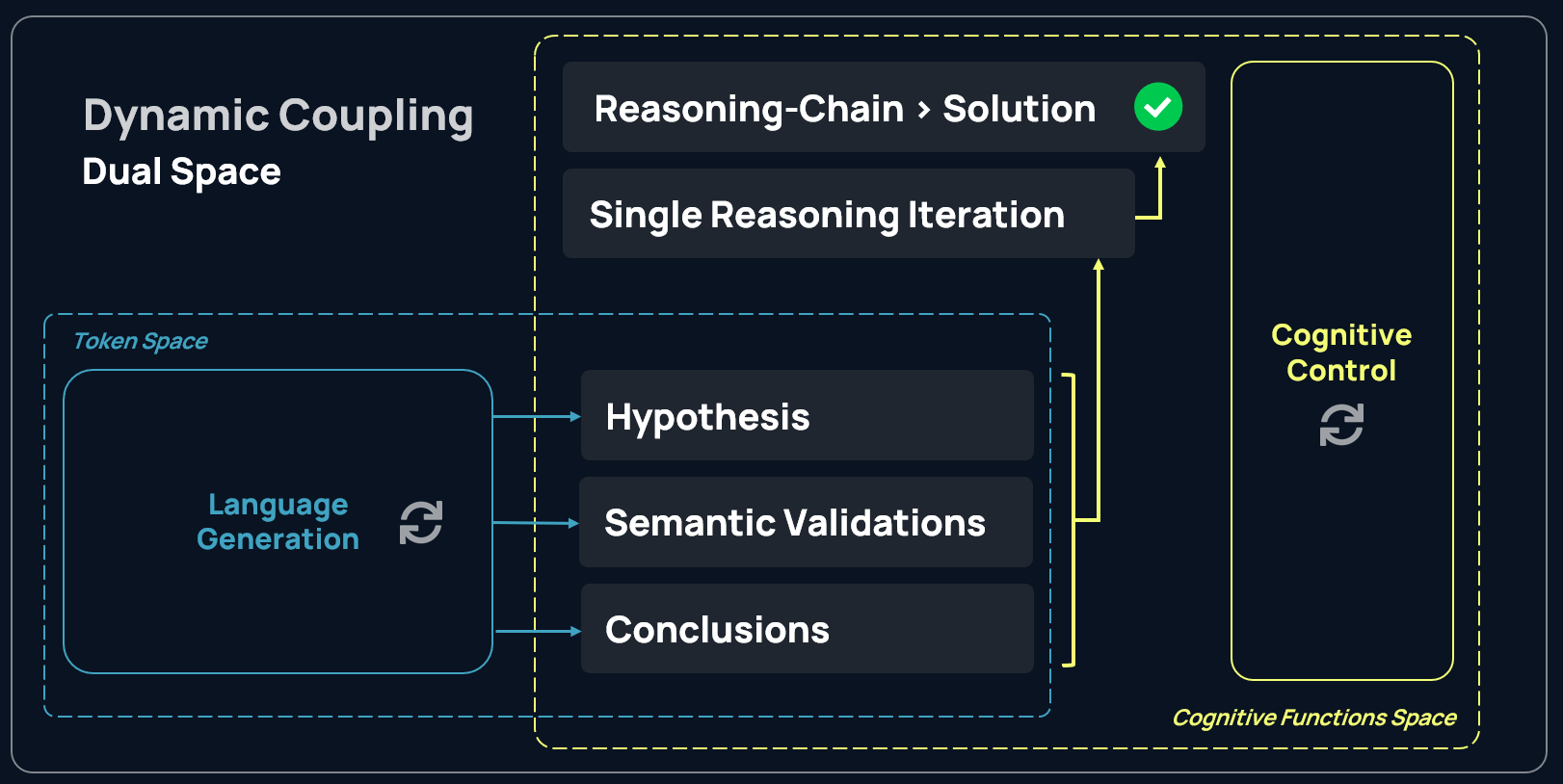

In the functional space, every reasoning step is evaluated before and after execution, not just at the end. This prevents errors from propagating unnoticed, a major problem particularly in long chains of thought.

Pre- & Post-Validation

Validation is performed at the level of both intent and result. This enables alignment at different levels of abstraction, which is critical for compliance with guidelines, regulations, and similar requirements.
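A minimal Python sketch of per-step pre- and post-validation, assuming each step declares an intent before it runs. `Step`, `execute_chain`, `ValidationError`, and the validator callables are hypothetical names; real checks would encode the guidelines or regulations in question.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One reasoning step: a declared intent and a callable that produces a result."""
    intent: str
    run: Callable[[], str]


class ValidationError(Exception):
    """Raised as soon as a single step fails pre- or post-validation."""


def execute_chain(
    steps: list[Step],
    pre: Callable[[str], bool],        # checks the intent before execution
    post: Callable[[str, str], bool],  # checks (intent, result) after execution
) -> list[str]:
    results = []
    for i, step in enumerate(steps):
        # Pre-validation at the level of intent: a disallowed step never runs.
        if not pre(step.intent):
            raise ValidationError(f"step {i}: intent rejected: {step.intent!r}")
        result = step.run()
        # Post-validation at the level of result: checked immediately, so an
        # error cannot silently flow into later steps.
        if not post(step.intent, result):
            raise ValidationError(f"step {i}: result rejected: {result!r}")
        results.append(result)
    return results
```

Raising on the first failed check is one simple design choice: it guarantees that no later step ever builds on an unvalidated result.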

Auto-Correction, by Design

If a validation fails, the system autonomously detects the error and attempts to correct it. If the correction attempts also fail, the system aborts the reasoning process, following the motto: “Better no result than a wrong result.”
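The correct-or-abort behavior can be sketched as a bounded retry loop. This is a hypothetical illustration: `run_step`, `run_chain`, the `max_corrections` limit, and the convention of passing the rejected candidate back to the generator are assumptions, not documented LVNA behavior.

```python
from typing import Callable, Optional

# A step function receives the previously rejected candidate (None on the
# first try) so it can attempt a correction; this convention is an assumption.
StepFn = Callable[[Optional[str]], str]


def run_step(generate: StepFn, validate: Callable[[str], bool],
             max_corrections: int = 2) -> Optional[str]:
    """Return the first candidate that passes validation, or None if the
    original attempt and all correction attempts fail."""
    rejected = None
    for _ in range(max_corrections + 1):  # one original attempt plus corrections
        candidate = generate(rejected)
        if validate(candidate):
            return candidate
        rejected = candidate
    return None


def run_chain(steps: list[StepFn], validate: Callable[[str], bool],
              max_corrections: int = 2) -> Optional[list[str]]:
    """Abort the whole chain as soon as one step cannot be corrected."""
    outputs = []
    for generate in steps:
        result = run_step(generate, validate, max_corrections)
        if result is None:
            return None  # better no result than a wrong result
        outputs.append(result)
    return outputs
```

Returning `None` instead of the last rejected candidate is the code-level form of the motto: a failed correction never leaks out as an answer.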

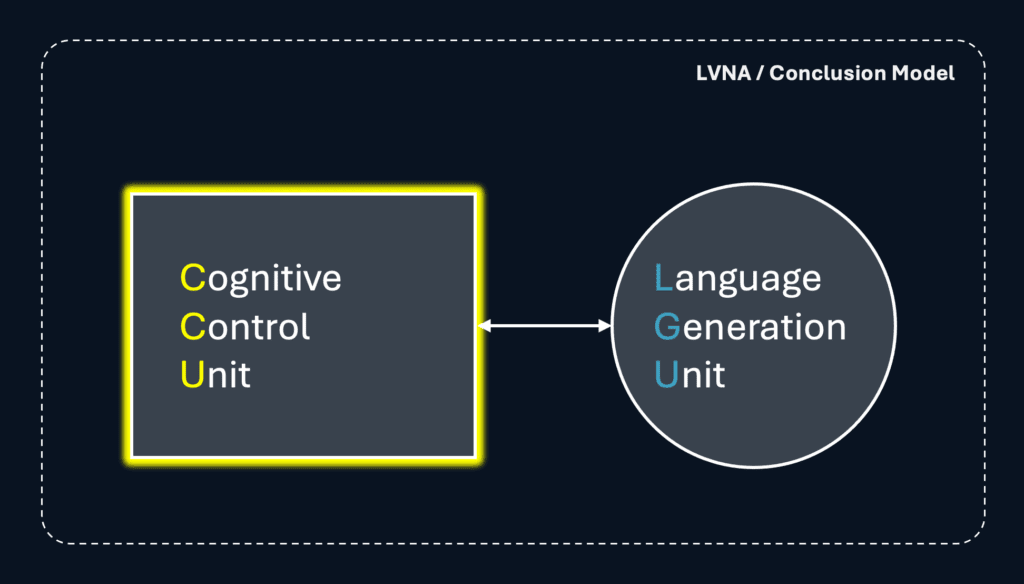

LVNA is an architecture that makes the Dual-Space approach implementable in production systems.

At a high level of abstraction, LVNA consists of two central functional blocks: a Language Generator and a Functional Control Unit. Together, they form the architecturally anchored cognitive core of CCMs.
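The division of labor between the two blocks can be sketched with two Python `Protocol` interfaces. All names and method signatures here (`generate`, `next_instruction`, `accept`, `reason`) are hypothetical; the toy `EchoGenerator` and `ScriptedControlUnit` exist only to make the sketch runnable and say nothing about how LVNA implements either block.

```python
from typing import Optional, Protocol


class LanguageGenerator(Protocol):
    """Token-space block: turns a functional instruction into text."""
    def generate(self, instruction: str, context: list[str]) -> str: ...


class FunctionalControlUnit(Protocol):
    """Functional-space block: decides what to do next and vets the output."""
    def next_instruction(self, context: list[str]) -> Optional[str]: ...
    def accept(self, instruction: str, text: str) -> bool: ...


def reason(gen: LanguageGenerator, ctrl: FunctionalControlUnit,
           max_steps: int = 10) -> Optional[list[str]]:
    context: list[str] = []
    for _ in range(max_steps):
        instruction = ctrl.next_instruction(context)
        if instruction is None:   # control unit signals the chain is complete
            return context
        text = gen.generate(instruction, context)
        if not ctrl.accept(instruction, text):
            return None           # rejected output: stop instead of building on it
        context.append(text)
    return None                   # step budget exhausted without completion


# Toy stand-ins so the sketch runs end to end (hypothetical, not real LVNA blocks).
class EchoGenerator:
    def generate(self, instruction: str, context: list[str]) -> str:
        return f"{instruction} -> ok"


class ScriptedControlUnit:
    def __init__(self, plan: list[str]):
        self.plan = plan

    def next_instruction(self, context: list[str]) -> Optional[str]:
        return self.plan[len(context)] if len(context) < len(self.plan) else None

    def accept(self, instruction: str, text: str) -> bool:
        return text.startswith(instruction)
```

The point the sketch tries to capture is that the control unit, not the generator, decides when a step is accepted and when the chain is complete.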

LVNA forms the foundation of real CCM implementations: AI models that deliver high-quality results even in long chains of thought and thus provide the basis for many high-value business applications.

From a customer value perspective, LVNA offers:

– Lower error rates with reduced need for manual correction.

– Complete transparency into the solution path.

– A higher degree of human trust as a foundation for rapid adoption.

The Cognitive Control Unit is the structural center of the model. It guides how thoughts are organized and how cognitive operators interact. The result: expression and purpose move forward in sync.

Reasoning needs memory that lasts. The Cognitive Register provides a stable, inspectable record of the model’s thought process — a clear view of how conclusions take shape.
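One simple way to picture an inspectable record is an append-only log of immutable entries. `CognitiveRegister`, `RegisterEntry`, and their fields are hypothetical names for illustration; the source does not specify the register's actual structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisterEntry:
    """One immutable record of a reasoning step."""
    step: int
    functional_state: str
    content: str
    validated: bool


class CognitiveRegister:
    """Hypothetical append-only record of a reasoning process."""

    def __init__(self) -> None:
        self._entries: list[RegisterEntry] = []

    def record(self, functional_state: str, content: str, validated: bool) -> RegisterEntry:
        entry = RegisterEntry(len(self._entries), functional_state, content, validated)
        self._entries.append(entry)  # entries are only ever appended, never edited
        return entry

    def entries(self) -> tuple[RegisterEntry, ...]:
        return tuple(self._entries)  # read-only snapshot for inspection

    def trace(self) -> str:
        """Human-readable view of how the conclusion took shape."""
        return "\n".join(
            f"[{e.step}] {e.functional_state}: {e.content} ({'ok' if e.validated else 'failed'})"
            for e in self._entries
        )
```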

LVNA strengthens reliability at scale. By separating the reasoning structure from surface generation, it enables clearer inspection and consistent operational stability — something traditional token-only models struggle to maintain.

A New Layer of Reasoning

CCMs take structured AI reasoning to a new level, in both the depth and the structure of the reasoning process itself. You have to experience it to understand it.

High-Value meets High-Stakes

In mission-critical business processes and/or regulated environments, “plausible-sounding” answers are not sufficient. CCMs offer reproducibility, complete transparency, and full auditability.

Ready for Enterprise Use

CCMs demonstrate that the limitations of the token space can be overcome—to deliver reliable results even in long chains of thought.

A new level of AI Business Value is waiting to be unlocked. Start now!